Creatively Fearless. Commercially Sharp.

Lance Montana creates brilliant brands, high-performance websites and marketing campaigns that connect with the right people, in the right place, at the right time. Based in Brisbane, Gold Coast and Sunshine Coast, we serve clients Australia-wide, turning insight into impact to help businesses grow faster.

Featured Project

Dolphins NRL Campaign

See how we brought this footy-fan-driven TV commercial (above) and campaign creative to life, highlighting how City of Moreton Bay Council is proudly backing this incredible National Rugby League team and celebrating every win together. Phins for life!

Towards Brisbane 2032

With the Brisbane 2032 Olympic Games on the horizon, we’re passionate about helping Australian businesses capitalise on this once-in-a-lifetime opportunity. We’re already developing marketing campaigns and strategies for businesses across Brisbane, Gold Coast, and Sunshine Coast – if you haven’t started preparing yet, now is the time. Whether you’re tendering for contracts or want to better position your brand for growth, we’ll help you go for gold.

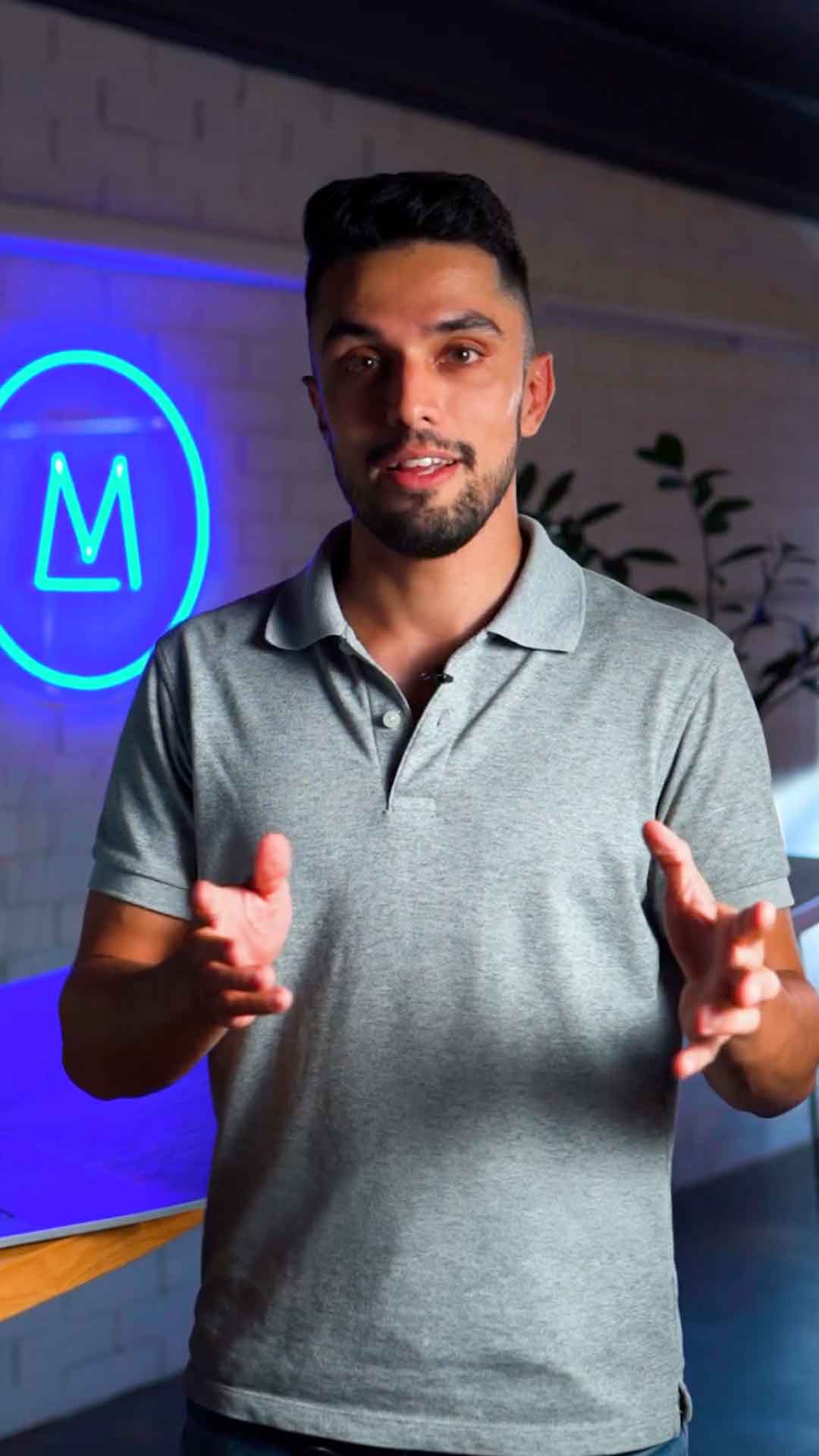

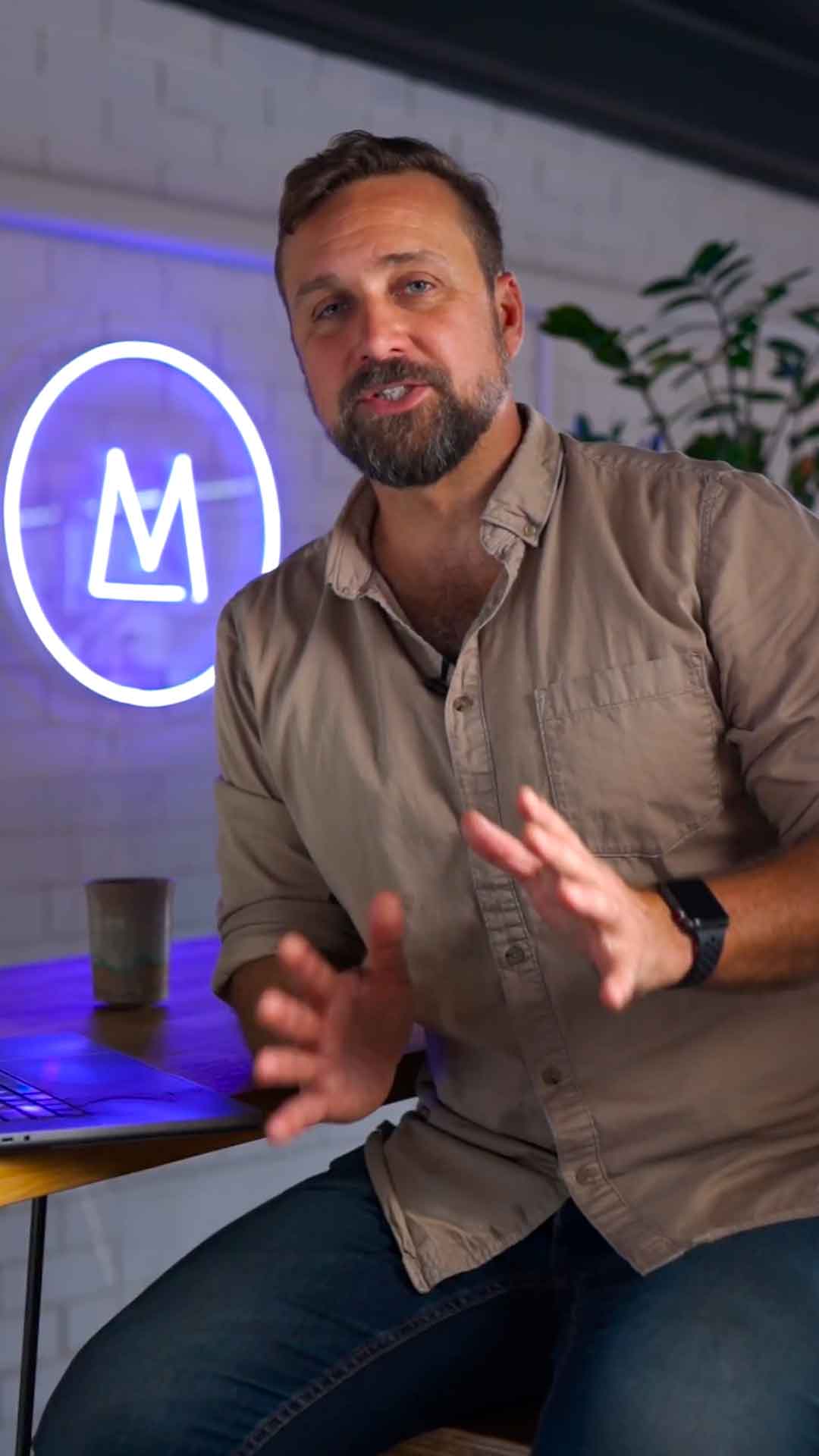

Meet the team

We are a team of specialist marketers, creatives, analysts, and web developers based in Brisbane, Gold Coast, and Sunshine Coast, with full-time remote staff in New South Wales, Victoria, and Tasmania. Whether you’re a local business in South East Queensland or a national organisation anywhere in Australia, Lance Montana is your digital marketing agency, creative agency, and web development team rolled into one.

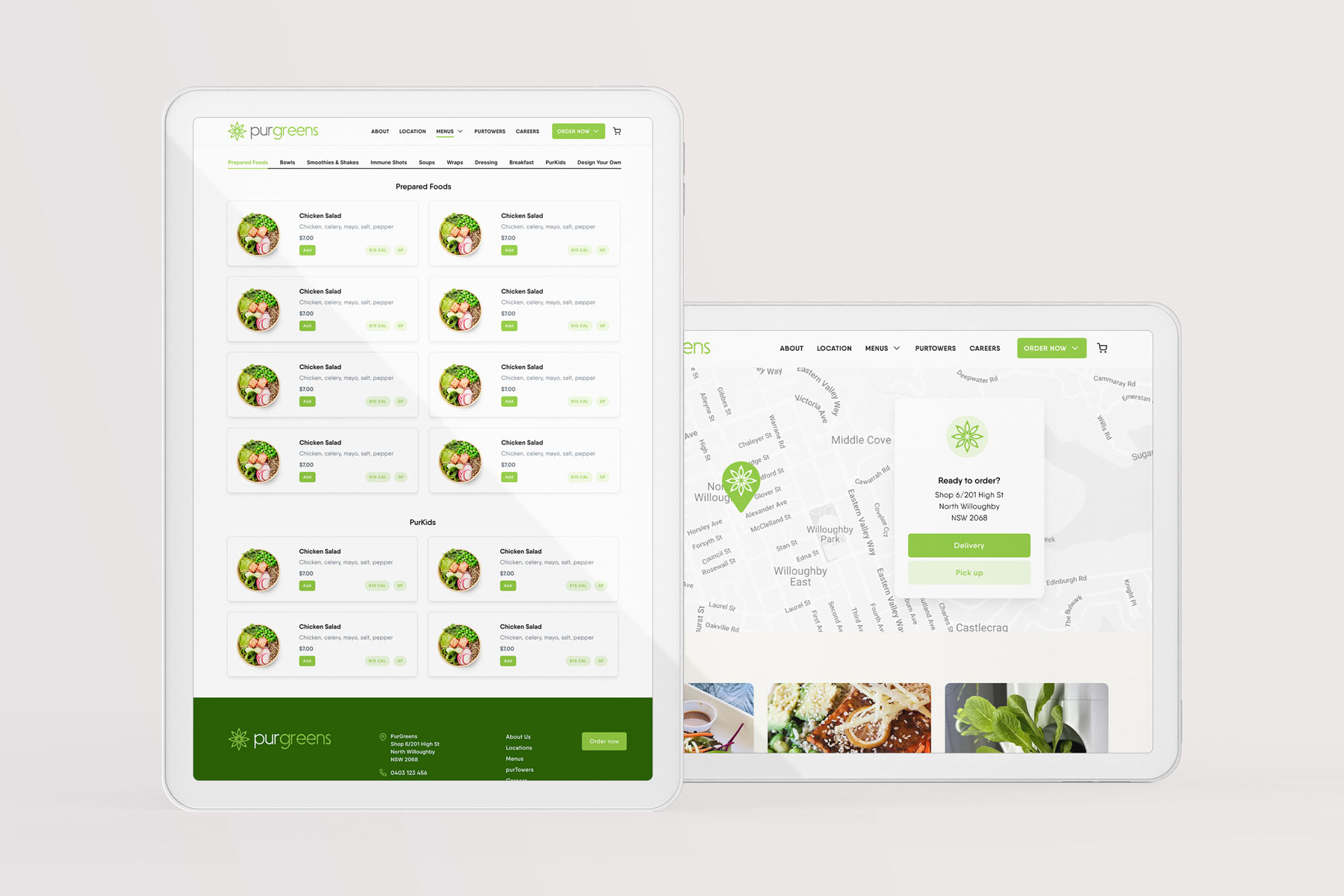

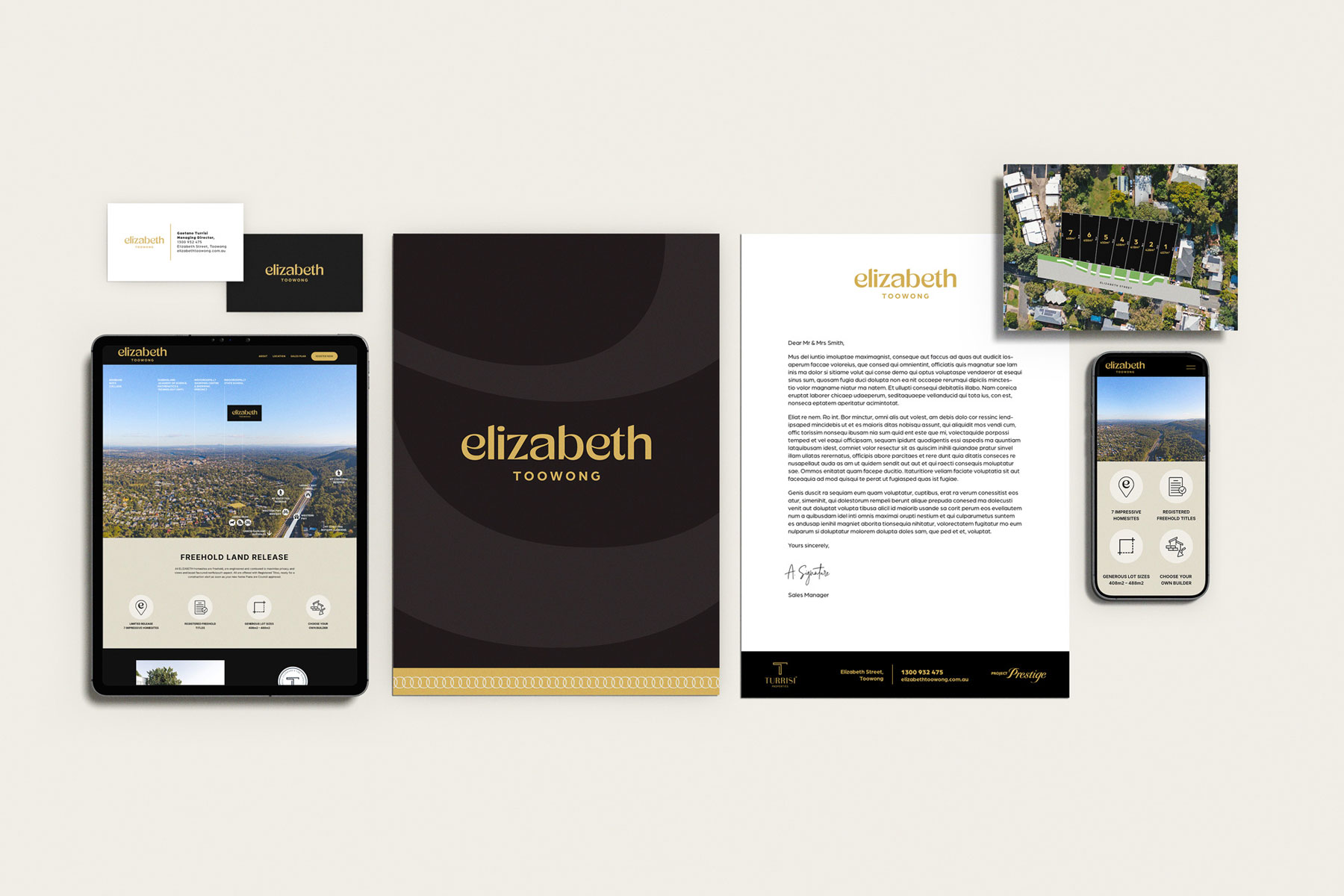

Brands we work with

Over the past decade, Lance Montana has developed rewarding, long-term partnerships with various clients across the private and public sectors.